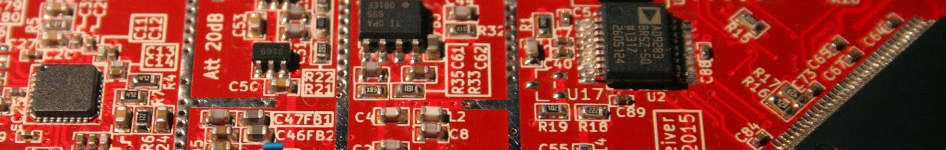

The Raman spectrometer we are developing has a high-resolution light microscope. For uneven surfaces, though, the small depth of focus often makes it hard to recognize what’s going on, since only a small part of the image is actually in focus, like this:

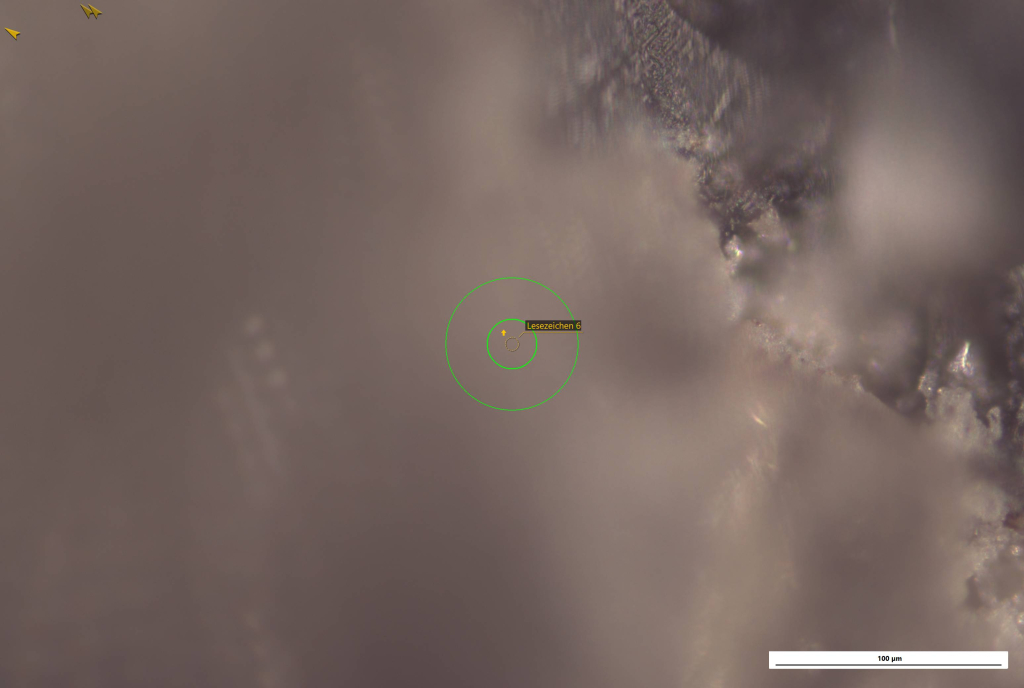

Since we can automatically adjust the focus, though, I recently developed a feature which creates a stack of images at different focal points and stitches the sharp areas together to obtain a fully sharp image.

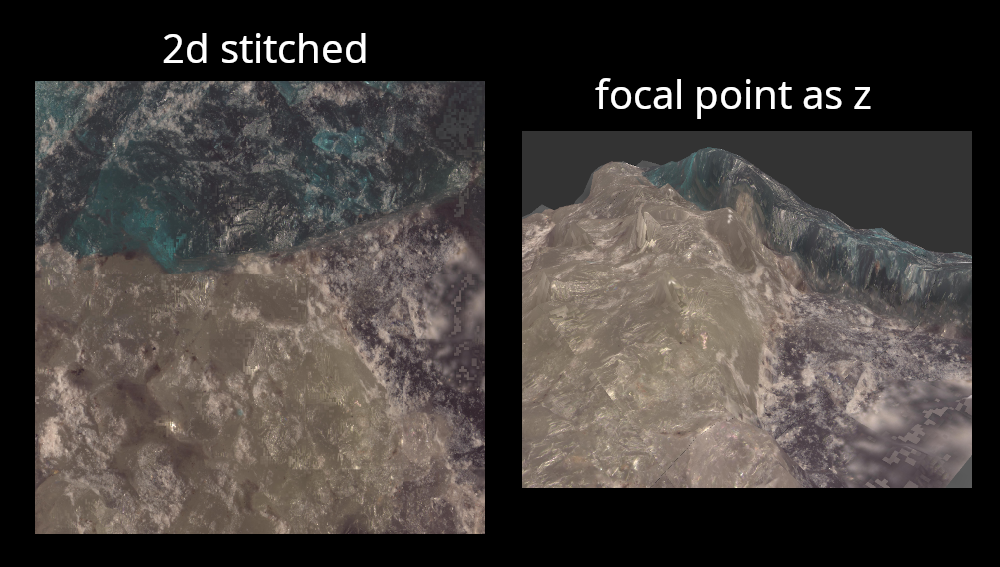

Curiously, though, the small depth of focus has an interesting property: it gives you a very good idea of where exactly the focus point is for each area of the image. This information can be used to obtain a z coordinate for each area, and create a rough 3D model.
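As a rough illustration of that idea, here is a minimal sketch of how a per-pixel z coordinate could be derived from a focus stack. It is an assumption, not the code used in the spectrometer software: the names `sharpestLayer` and `layerToZ` are hypothetical, the sharpness measure itself (for example local contrast) is taken as given, and the focus steps are assumed to be evenly spaced.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: sharpness[layer][pixel] holds a per-pixel focus measure
// for every image in the focus stack. Returns, for each pixel, the index of the
// layer in which that pixel is sharpest.
std::vector<std::size_t> sharpestLayer(const std::vector<std::vector<float>>& sharpness)
{
    if (sharpness.empty()) {
        return {};
    }
    std::vector<std::size_t> best(sharpness.front().size(), 0);
    for (std::size_t layer = 1; layer < sharpness.size(); ++layer) {
        // All layers must cover the same pixels.
        assert(sharpness[layer].size() == best.size());
        for (std::size_t px = 0; px < best.size(); ++px) {
            if (sharpness[layer][px] > sharpness[best[px]][px]) {
                best[px] = layer;
            }
        }
    }
    return best;
}

// Convert a layer index to a z coordinate, assuming evenly spaced focus steps.
float layerToZ(std::size_t layer, float zStart, float zStep)
{
    return zStart + zStep * static_cast<float>(layer);
}
```

The resulting z values are only as fine as the focus step, which is why the model is a rough one.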

Of course, I want to show this 3D image to the user. This isn’t super hard to do using OpenGL, so I’m using QOpenGLWidget to create this visualisation.

But… we need camera navigation

Of course, the user wants to view this model from different angles (that’s kind of the point). So we need camera navigation. This is surprisingly tricky to get right, and since this isn’t the first time I have spent a fair amount of frustration on finding a solution that works well, I’ll document it here.

The first thing to realize is that we don’t want to work with the 4×4 modelview matrix as our data structure. It’s really mind-warping to extract meaningful information from it. Instead, we choose the following data structure:

std::optional<QVector2D> mousePressPosition;

struct {

QVector3D focusPoint = {0, 0, 0};

QVector3D cameraLocation = {0, 0, -2};

QVector3D up = QVector3D::crossProduct({-1, 0, 0}, cameraLocation - focusPoint).normalized();

} camera;

The mousePressPosition contains the position of the last processed mouse event in screen coordinates.

The camera struct contains the focus point, which is the point the camera is looking at; the camera location, which is, well, where the camera is located; and the orientation of the camera in terms of its “up” vector, i.e. the direction of the y axis on the screen in scene coordinates. The complicated initialization enforces that “up” is always orthogonal to the view direction and normalized: the cross product with {-1, 0, 0} takes care of the former, normalized() of the latter. With the default values the result is simply {0, -1, 0}, but written like this you can easily change the numbers without worrying.

This forms a very intuitive coordinate system for our camera: the axes are the view axis (the “focus vector”, which in the code below is the vector from the focus point to the camera location, i.e. the reverse of the direction we are looking in), the up direction on the screen, and the right direction on the screen (easily obtained by computing the cross product of the up direction and the focus vector). The origin is the focus point.
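To make this coordinate system concrete, here is a minimal sketch that derives the three axes from the camera state. It deliberately avoids Qt so it compiles on its own: `Vec3`, `CameraBasis` and `cameraBasis` are hypothetical stand-ins, not part of the widget, and the operand order of the cross product follows the mouse handling code in this post.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for QVector3D so the sketch compiles without Qt.
struct Vec3 { float x, y, z; };

Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalized(Vec3 v)
{
    float const len = std::sqrt(dot(v, v));
    assert(len > 0.0f); // a zero vector has no direction
    return {v.x / len, v.y / len, v.z / len};
}

// The three axes of the camera coordinate system; the origin is the focus point.
struct CameraBasis { Vec3 focusVector, up, right; };

CameraBasis cameraBasis(Vec3 cameraLocation, Vec3 focusPoint, Vec3 up)
{
    Vec3 const focusVector = cameraLocation - focusPoint; // focus point -> camera
    Vec3 const upN = normalized(up);
    return {focusVector, upN, normalized(cross(upN, focusVector))};
}
```

For the default camera (location {0, 0, -2}, focus point at the origin, up {0, -1, 0}) the right axis comes out along the positive x axis.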

From these data, it is now quite simple to obtain the modelview matrix:

QMatrix4x4 MyGLWidget::currentMatrix() const

{

QMatrix4x4 viewMatrix;

viewMatrix.lookAt(camera.cameraLocation, camera.focusPoint, camera.up);

return viewMatrix;

}

Now, let’s move it

On to interactivity! First, we need to book-keep whether a mouse button is down:

void MyGLWidget::mousePressEvent(QMouseEvent* e)

{

mousePressPosition = QVector2D(e->localPos());

}

void MyGLWidget::mouseReleaseEvent(QMouseEvent*)

{

mousePressPosition.reset();

}

Simple enough. Then, we need to react when the mouse moves:

void MyGLWidget::mouseMoveEvent(QMouseEvent* e)

{

if (!mousePressPosition) {

return;

}

auto const pos = QVector2D(e->localPos());

auto const diff = pos - *mousePressPosition;

auto const focusVector = camera.cameraLocation - camera.focusPoint;

auto const up = camera.up.normalized();

auto const right = QVector3D::crossProduct(camera.up, focusVector).normalized();

if (e->buttons() & Qt::LeftButton) {

// rotate

QMatrix4x4 mat;

mat.rotate(-diff.x(), up);

mat.rotate(-diff.y(), right);

auto const newFocusVector = mat.map(focusVector);

auto const newCameraPos = newFocusVector + camera.focusPoint;

camera.cameraLocation = newCameraPos;

camera.up = mat.mapVector(up);

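// Ensure the "up" vector is actually orthogonal to the focus vector. This is approximately

// the case anyways, but might drift over time due to float precision. To do so, subtract

// the component of "up" that lies along the normalized focus vector.

auto const viewAxis = newFocusVector.normalized();

camera.up -= QVector3D::dotProduct(camera.up, viewAxis) * viewAxis;

camera.up.normalize();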

}

if (e->buttons() & Qt::RightButton) {

// pan

auto const panDelta = -diff.x() / width() * right + diff.y() / height() * up;

camera.cameraLocation += panDelta;

camera.focusPoint += panDelta;

}

mousePressPosition = QVector2D(e->localPos());

update();

}

First, we compute the coordinate system as described above from our camera state.

Then, for rotation mode (left button, here), we rotate our focus vector (i.e. the “non-normalized view direction”) around our up and right axes, for x and y mouse movement respectively. QMatrix4x4::rotate takes its angle in degrees, so one pixel of mouse movement corresponds to one degree of rotation. Since we want the rotation of the camera to happen around the focus point (this feels very intuitive), we do not move the focus point, but instead set the camera position to the old focus point plus the new focus vector. Finally, as book-keeping, we also rotate the “up” vector of the camera to match the new position. We then subtract from it its component along the focus vector (a Gram-Schmidt step) and renormalize, so that it stays orthogonal to the view direction without any potential drift.
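The orthogonalization step is easy to get subtly wrong, so here it is in isolation as a minimal, Qt-free sketch. `Vec3` and `orthogonalizedUp` are hypothetical names introduced for illustration. The important detail is that the projection is taken onto the normalized focus vector, and that this same unit vector is what gets subtracted.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for QVector3D so the sketch compiles without Qt.
struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalized(Vec3 v)
{
    float const len = std::sqrt(dot(v, v));
    assert(len > 0.0f); // a zero vector has no direction
    return {v.x / len, v.y / len, v.z / len};
}

// One Gram-Schmidt step: remove from `up` its component along `focusVector`,
// then rescale the remainder to unit length.
Vec3 orthogonalizedUp(Vec3 up, Vec3 focusVector)
{
    Vec3 const axis = normalized(focusVector);
    float const along = dot(up, axis); // signed length of the unwanted component
    return normalized({up.x - along * axis.x,
                       up.y - along * axis.y,
                       up.z - along * axis.z});
}
```

For example, an up vector of {0, 1, 0.2} that has drifted towards a focus vector of {0, 0, -2} is pulled back to {0, 1, 0}.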

For pan mode (right button, here), things are somewhat simpler: we simply move the camera location and the focus point in the direction of the right and up vectors of our coordinate system, based on the x and y delta of the mouse movement.
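As a minimal, Qt-free sketch of the pan step: `Vec3`, `Camera` and `pan` are hypothetical stand-ins, and the widget size is passed in explicitly instead of being read from the widget. Because both points move by the same offset, the focus vector, and with it the viewing direction and distance, stays unchanged.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for QVector3D so the sketch compiles without Qt.
struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(float s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }

struct Camera { Vec3 focusPoint; Vec3 cameraLocation; };

// Shift camera and focus point together along the screen's right and up axes.
// dx/dy are the mouse movement in pixels since the last processed event.
void pan(Camera& camera, Vec3 right, Vec3 up,
         float dx, float dy, float widgetWidth, float widgetHeight)
{
    assert(widgetWidth > 0.0f && widgetHeight > 0.0f);
    Vec3 const panDelta = (-dx / widgetWidth) * right + (dy / widgetHeight) * up;
    camera.cameraLocation = camera.cameraLocation + panDelta;
    camera.focusPoint = camera.focusPoint + panDelta;
}
```

With this scaling, dragging across the full widget width moves the scene by one unit regardless of the zoom level. Multiplying the offset by the length of the focus vector would be one possible refinement if panning should feel the same at every distance.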

Finally, we remember the new mouse position (all changes from the old delta were applied), and schedule a repaint for our widget.

Add some zoom, and done!

Zooming is a lot simpler, fortunately:

void MyGLWidget::wheelEvent(QWheelEvent* event)

{

auto const delta = event->angleDelta().y();

auto const focusVector = camera.cameraLocation - camera.focusPoint;

auto const mul = -delta / 1000.;

camera.cameraLocation += mul*focusVector;

update();

}

We simply shorten or lengthen the focus vector, thus moving the camera towards or away from the focus point, depending on the wheel movement.
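To put numbers on this, here is a minimal, Qt-free sketch of the same arithmetic. `Vec3` and `zoomedCameraLocation` are hypothetical names, and the assertion is an extra guard that the handler above does not have. Most mice report `angleDelta().y()` in steps of 120, so one wheel notch changes the distance to the focus point by 12 %.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for QVector3D so the sketch compiles without Qt.
struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(float s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }

// Returns the new camera location after a wheel movement of angleDeltaY
// (positive values zoom in, negative values zoom out).
Vec3 zoomedCameraLocation(Vec3 cameraLocation, Vec3 focusPoint, int angleDeltaY)
{
    Vec3 const focusVector = cameraLocation - focusPoint; // focus point -> camera
    float const mul = -static_cast<float>(angleDeltaY) / 1000.0f;
    // Added guard (not in the original handler): a single event with a delta of
    // 1000 or more would move the camera onto or through the focus point.
    assert(mul > -1.0f);
    return cameraLocation + mul * focusVector;
}
```

Starting from a distance of 2, one notch forward (delta 120) brings the camera to a distance of 1.76, and one notch back (delta -120) moves it out to 2.24.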

That’s it! Hope it helps you.
